Vision Transformers: Rethinking Attention For Fine-Grained Detail

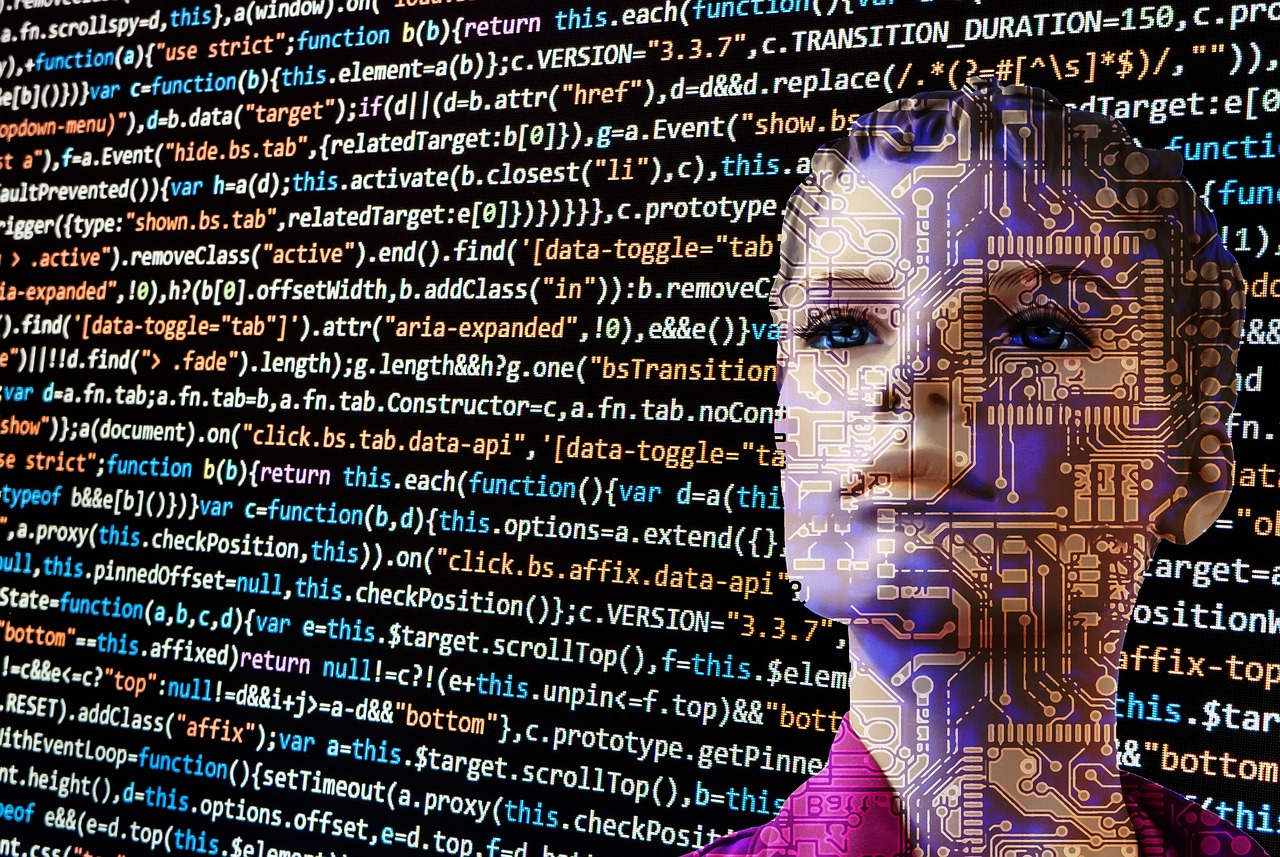

Vision Transformers (ViTs) offer a different perspective on how machines "see" and interpret images. Rather than relying on convolutional neural networks (CNNs), ViTs apply the transformer architecture, originally designed for natural language processing, to image recognition tasks. On many benchmarks this approach matches or surpasses comparable CNNs, particularly when the model is pre-trained on large datasets, and it has opened new avenues for research and applications across industries.

What are Vision Transformers?

The Transformer Architecture: A Quick Recap

Vision Transformers are built upon the transformer architecture, which relies on a self-attention mechanism. Unlike CNNs that process images through layers of filter...
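
To make the self-attention idea concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is an illustration under stated assumptions, not the implementation of any particular ViT: the names `self_attention`, `matmul`, `softmax`, `w_q`, `w_k`, and `w_v` are hypothetical, the projection matrices are identity matrices rather than learned weights, and each row of `x` stands in for the embedding of one image patch. A real model would use a tensor library and multiple heads.

```python
import math

def softmax(row):
    # Subtract the row maximum before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # Multiply two matrices stored as lists of rows.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, w_q, w_k, w_v):
    # Project every token into query, key, and value vectors.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # Compare every query with every key, scaled by sqrt(key dimension).
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    # Each row of weights sums to 1: how much one token attends to every token.
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted average of all value vectors.
    return matmul(weights, v), weights

# Toy input: 3 "patch" tokens with 2-dimensional embeddings (made-up values).
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = self_attention(x, identity, identity, identity)
```

The point of the sketch is that every token's output depends on all other tokens in a single step, so information from any patch can reach any other patch within one layer.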